A new project called “Brain2Music” from Google and Osaka University in Japan could turn brain activity into music using AI.

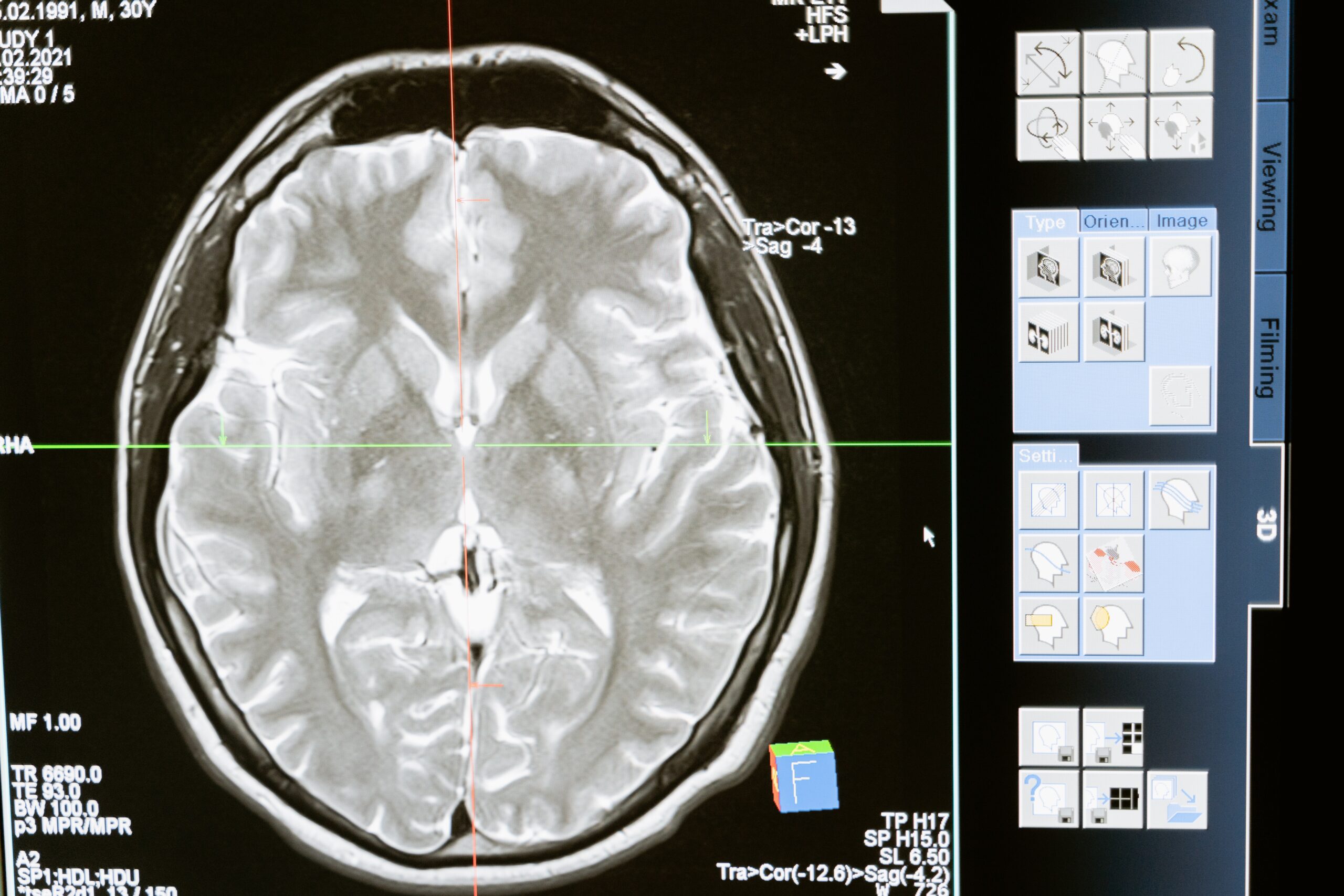

The technology came from a research project that placed volunteers inside an fMRI scanner and played them over 500 tracks across 10 music styles. Their brain activity was then measured and used to create images that could be put into Google’s AI music generator, MusicLM.

The music the AI generator produced resembled the tracks the volunteers had heard, linking brain activity to musical stimuli.

The researchers found that “When a human and MusicLM are exposed to the same music, the internal representations of MusicLM are correlated with brain activity in certain regions.”

These findings could help scientists to translate thoughts into music, leaving behind the laborious days of spending hours on Ableton or FL Studio constructing beats.

But the researchers also acknowledge that brain activity varies from person to person, and that this music was reconstructed from an outside stimulus rather than from music the volunteers imagined.

You can check out the full paper on Google and Osaka University’s “Brain2Music” study here.

All images stock photos from Pexels